ClickHouse需要分区吗?ClickHouse到底有多快?

目录

ClickHouse需要分区吗?

ClickHouse分区背景

在大数据常规的认知中,分区能减少表数据的扫描进而加快查询速度,然在ClickHouse中其实并不是这样。我在生产环境中多分区写入这里得到了如下的报错:

|

1 2 3 4 5 6 7 8 |

DB::Exception: Too many partitions for single INSERT block (more than 100). The limit is controlled by 'max_partitions_per_insert_block' setting. Large number of partitions is a common misconception. It will lead to severe negative performance impact, including slow server startup, slow INSERT queries and slow SELECT queries. Recommended total number of partitions for a table is under 1000..10000. Please note, that partitioning is not intended to speed up SELECT queries (ORDER BY key is sufficient to make range queries fast). Partitions are intended for data manipulation (DROP PARTITION, etc).. |

这里:“that partitioning is not intended to speed up SELECT queries (ORDER BY key is sufficient to make range queries fast). Partitions are intended for data manipulation (DROP PARTITION, etc)”,意思是分区并不能加快查询速度,而是用做数据表的分离和附加数据使用,也就是用于更新或者删除数据用。

ClickHouse分区生产方案

- 我们生产环境离线数据更新周期一般为T+1,极少为Hour,且ClickHouse不推荐分区大于1000;

- ClickHouse删除分区的动作,其实是异步的高频次delete操作过于沉重。官方推荐DETACH卸载再做删除。

所以我们这里结合实际业务场景并没有采用分区的方法去更新数据,而是参考ElasticSearch热更新的方案,采用了增量/全量滚动更新的方式,具体为:

ClickHouse对于分区的理解和操作

ClickHouse到底有多快?

测试环境

微软云,b系列, 2H4G, 持续IO不到30M,两分片两副本ClickHouse集群

创建本地表

|

1 2 3 4 5 6 7 8 9 10 11 12 |

create table cppla.fenqu_local_table on CLUSTER 'company_cluster'( id UInt64, phone UInt64, s1 String, s2 String, s3 String, s4 String, s5 String, s6 String, date String )engine=ReplicatedMergeTree('/clickhouse/tables/{shard}/fenqu_local_table', '{replica}') order by(id, date); |

创建分布式表

|

1 2 3 4 5 6 7 8 9 10 11 |

create table cppla.fenqu_distribute_table on CLUSTER 'company_cluster'( id UInt64, phone UInt64, s1 String, s2 String, s3 String, s4 String, s5 String, s6 String, date String )engine = Distributed(company_cluster,cppla,fenqu_local_table, rand()); |

脚本写入1亿数据

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 |

# coding: utf-8 import time import random from xxx import clickhouse_client def sranddate(): a1 = (2020, 1, 1, 0, 0, 0, 0, 0, 0) a2 = (2020, 12, 31, 23, 59, 59, 0, 0, 0) start = time.mktime(a1) end = time.mktime(a2) t = random.randint(start, end) date_touple = time.localtime(t) date = time.strftime("%Y-%m-%d", date_touple) return date def ck(): sql = ''' insert into cppla.fenqu_local_table_partition(id,phone,s1,s2,s3,s4,s5,s6,date) values ''' a = [] count = 0 for i in range(1, 100000000): a.append(( i, i, (random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')) * 12, (random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')) * 12, (random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')) * 12, (random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')) * 12, (random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')) * 12, (random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')+random.choice('abcdefghijklmnopqrstuvwxyz')) * 12, sranddate(), )) if len(a) == 1000000: clickhouse_client.exec_sql( sql, a ) a=[] count += 1 print('send: 100w, count: %d' % count) if count >= 20: time.sleep(180) else: time.sleep(60) if len(a)!=0: clickhouse_client.exec_sql( sql, a ) if __name__ == "__main__": ck() |

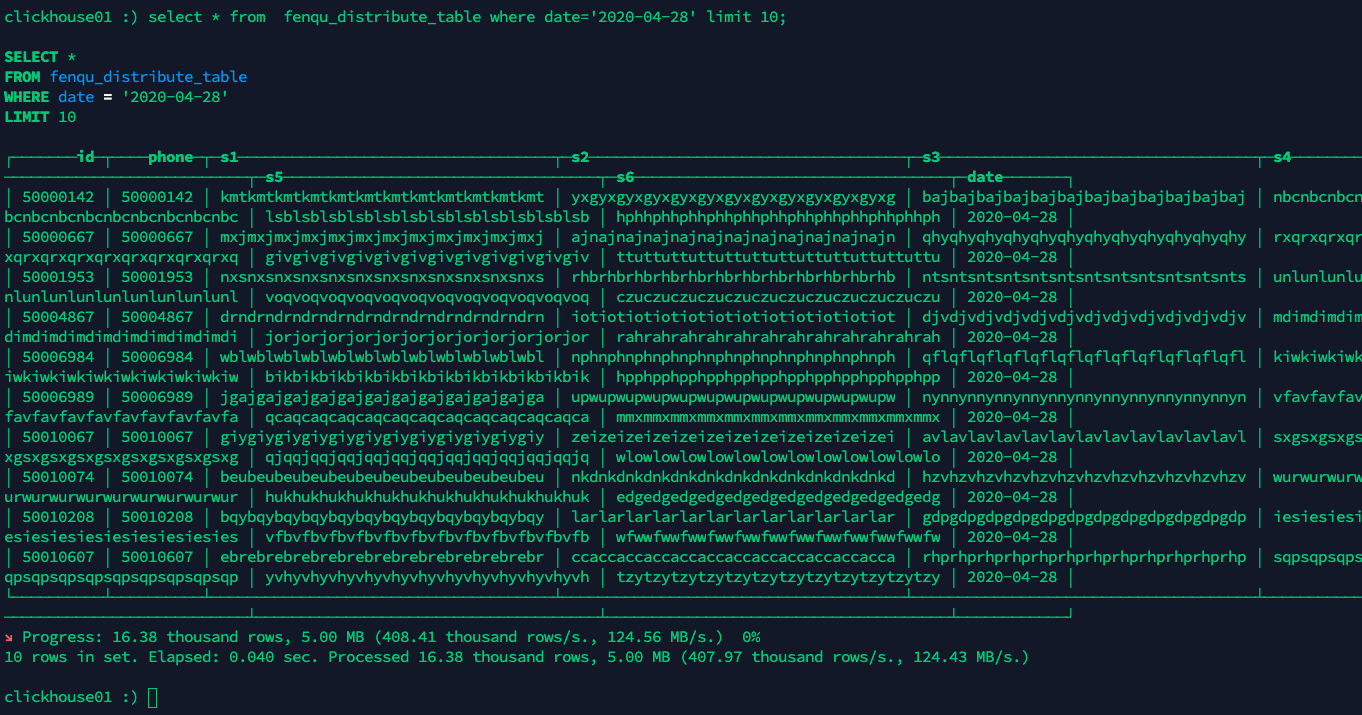

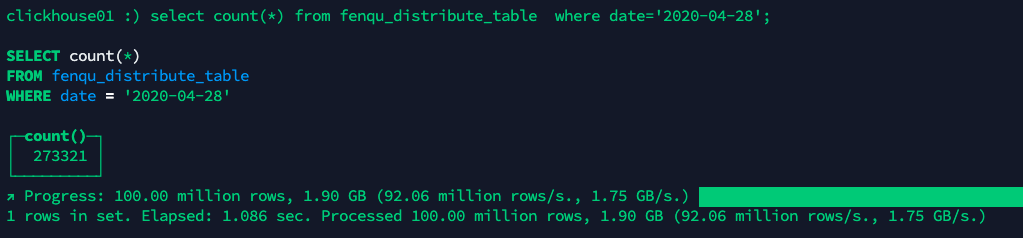

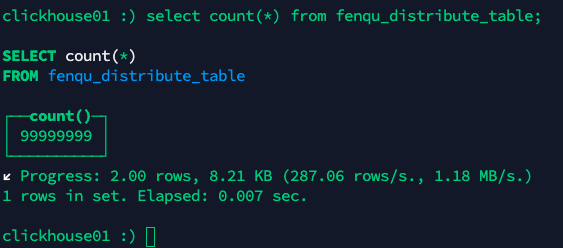

ClickHouse速度测试

查询总数据量

查询某一天数据

查询某一天数据总量

稀疏索引骚啊

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

clickhouse01 :) select count(*) from fenqu_distribute_table where date='2020-12-01'; SELECT count(*) FROM fenqu_distribute_table WHERE date = '2020-12-01' ┌─count()─┐ │ 272507 │ └─────────┘ ↙ Progress: 100.00 million rows, 1.90 GB (87.79 million rows/s., 1.67 GB/s.) █████████████████████████████████████████████████████████████████████▊ 98% 1 rows in set. Elapsed: 1.139 sec. Processed 100.00 million rows, 1.90 GB (87.79 million rows/s., 1.67 GB/s.) clickhouse01 :) |

ClickHouse写入本地表注意事项

- 间歇性大批量写入,例:即写入10-100w,然后sleep,循环即可。

- 而非小批量持续写入,例:持续循环写入1w。

ClickHouse创建表注意事项

更新表时为防止zookeeper中路径冲突,创建表时ClickHouse存储路径精确到周期更新时间路径为后缀为佳,比如说时间戳也可。

版权所有,ClickHouse需要分区吗?ClickHouse到底有多快?,by:cpp.la

2 Replies to “ClickHouse需要分区吗?ClickHouse到底有多快?”

垃圾佬,带带

大佬,报群名。